Data-driven 3D Local Features for Point Cloud Registration

Developing robust and accurate methods for aligning 3D point clouds by learning discriminative feature descriptors is a long-standing endeavor. Point clouds, which represent the shape and geometry of objects in 3D space, are critical for applications like 3D reconstruction, autonomous navigation, and augmented reality. Traditional methods rely on handcrafted features, whereas data-driven approaches use machine learning, particularly deep learning, to extract local features directly from point clouds. These learned features can capture complex geometric patterns and can be made invariant or robust to transformations such as rotation and scale, enabling more precise and efficient point cloud registration in challenging environments.

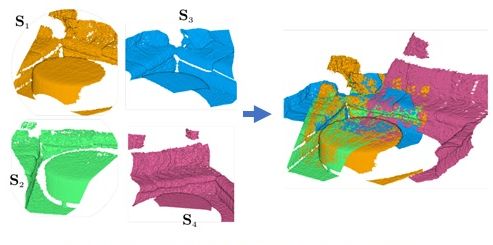

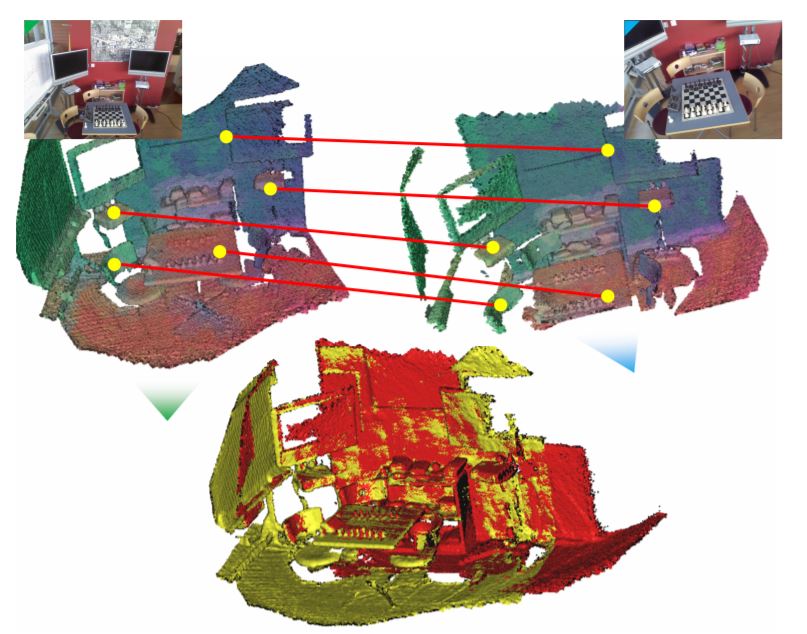

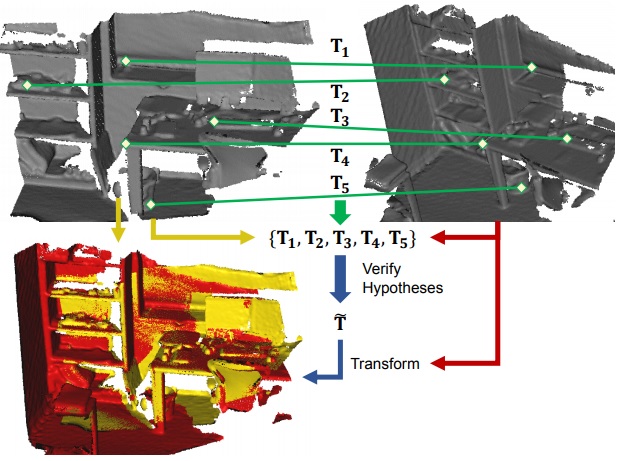

Through a series of publications, we have explored the development of 3D local features suitable for use in autonomous driving as well as robotics applications like bin picking and navigation. Our methods can also be used for reconstructing scenes by aligning information from different views or scans.
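As a rough illustration of how matched local features lead to an alignment, the sketch below pairs descriptors by mutual nearest neighbour and then solves for a rigid transform in closed form (the Kabsch algorithm). It is a generic textbook pipeline, not the method of any publication listed here. The function names `match_descriptors` and `estimate_rigid_transform` are hypothetical, and the descriptors are random placeholder vectors standing in for the output of a learned, rotation-invariant feature extractor.

```python
import numpy as np

def match_descriptors(desc_src, desc_dst):
    """Mutual nearest-neighbour matching between two descriptor sets."""
    # Pairwise squared Euclidean distances, shape (n_src, n_dst).
    d2 = ((desc_src[:, None, :] - desc_dst[None, :, :]) ** 2).sum(axis=-1)
    nn_src = d2.argmin(axis=1)  # closest dst descriptor for each src
    nn_dst = d2.argmin(axis=0)  # closest src descriptor for each dst
    src_idx = np.arange(len(desc_src))
    mutual = nn_dst[nn_src] == src_idx  # keep pairs that choose each other
    return src_idx[mutual], nn_src[mutual]

def estimate_rigid_transform(src, dst):
    """Least-squares R, t such that dst ~= src @ R.T + t (Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

# Synthetic example: a second "scan" is a rotated, shifted, shuffled copy.
rng = np.random.default_rng(0)
src = rng.normal(size=(50, 3))
angle = 0.5
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.3, -0.2, 1.0])
perm = rng.permutation(len(src))
dst = (src @ R_true.T + t_true)[perm]

# Placeholder descriptors (assumption): the same vector for the same
# physical point in both scans, as an ideal invariant descriptor would give.
desc_src = rng.normal(size=(50, 32))
desc_dst = desc_src[perm]

i, j = match_descriptors(desc_src, desc_dst)
R, t = estimate_rigid_transform(src[i], dst[j])
```

With real scans the matches contain outliers, so the closed-form step is usually wrapped in a robust estimator such as RANSAC rather than applied to all matches at once.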

Related Publications

2022

2022

2021

2020

2019

2019

- 3D Local Features for Direct Pairwise Registration. In IEEE Conf. Computer Vision Pattern Recognition (CVPR), 2019

2018

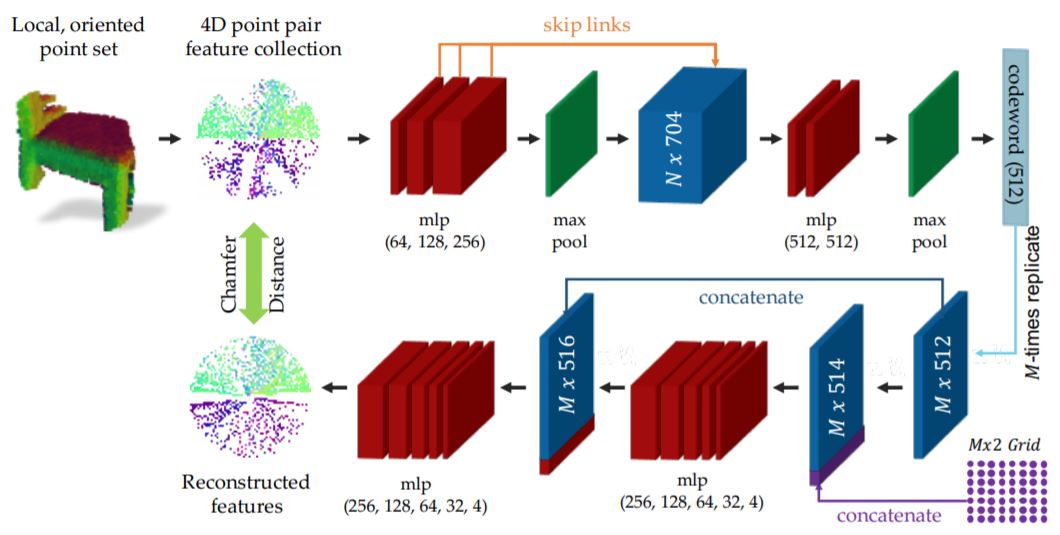

- PPF-FoldNet: Unsupervised Learning of Rotation Invariant 3D Local Descriptors. In Eur. Conf. Computer Vision (ECCV), 2018

2018

- PPFNet: Global Context Aware Local Features for Robust 3D Point Matching. In IEEE Conf. Computer Vision Pattern Recognition (CVPR), 2018